Extreme Prepper for AGI

What the builder of $GOOG's breakout AI hit NotebookLM said to do

Have you used NotebookLM before?

Remember back in the 2024s (gosh, time contraction is in effect) when NotebookLM came out and surprised a niche group of us, myself included, with how, seemingly overnight, you could create your own podcast?

All you needed to do was upload some file(s), wait a few minutes, and out came a man and a woman bantering about the topics in your sources. It was miraculous for 2024, and perhaps it still is. But I think today we’ll believe anything when it comes to AI.

Raiza was/is one of the Product Managers who took NotebookLM from 0-to-1. She is one of the few people in the world (though more and more today) who is both an early adopter and an insider when it comes to AI.

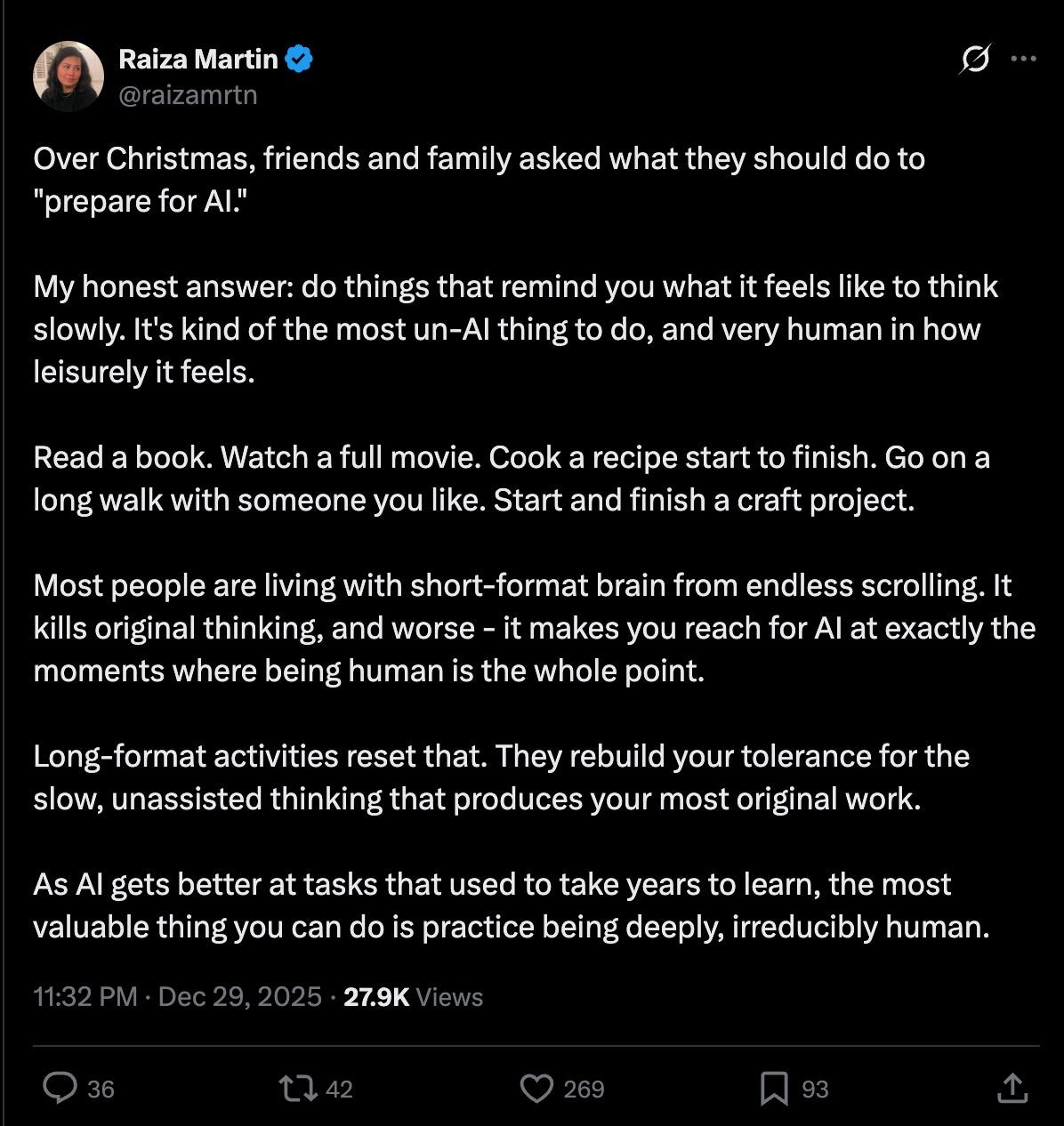

Since anyone who builds or manages an AI product, or is even loosely attached to anything AI, is one of the new soothsayers these days (not a jab at Raiza herself), many people asked her over the 2025 Christmas break what they should do to “prepare for AI”. Here is what she said:

It’s pretty self-explanatory.

I’ve even written about how modern life, and especially the ascent of AI, has created a counter-reaction in the form of Friction-maxxing.

AI is pushing our limits into the philosophical realm. It was always trending there anyway: some people say we no longer need to save for retirement, while others say we no longer need “a job”.

Will we end up in a techno-feudalist world, a utopia, or a dystopian, nihilistic one? Or all of the above?

As Buffett and Munger have said, I’d better stick to my circle of competence. For me, that is loosely being a student (literally) of the first industrial revolution, behavioural economics and $CSU. I’ll leave the philosophy to others.

Here are the key questions to ask if we want to stick to business analysis when thinking about GenAI’s impact on the tools used by the small, medium and large enterprises that Constellation Software serves:

Which tasks and workflows (rather than whole jobs) can AI perform more reliably than humans? (In human factors engineering, this is called function allocation.)

In this new system (machines, robots and living, breathing humans), what is the best use of each, and where should the handoffs reasonably take place to keep the system efficient? (In tech circles, I’ve often heard that we might be creating new bottlenecks: code gets written faster, but code reviews become more of a burden.)

For non-functional requirements, how do we ensure that reporting, compliance and regulatory requirements continue to provide assurance, especially for mission-critical software like that of many $CSU companies?

And does AGI (when we truly get there) open up new surface areas for human ingenuity and creativity that would otherwise go unexplored?